Shopify announced the launch of YouTube shopping this week, outlining benefits including:

- Customers can buy products right when they discover them

- Instantly sync products to YouTube

- Create authentic live shopping experiences

- Sell on YouTube, manage on Shopify

What does this mean for our clients?

There are some eligibility restrictions for this product at the moment. Your YouTube channel must already have 1,000 subscribers and at least 4,000 hours of annual watch time. This means that, as a brand, you will need either an already well-established YouTube channel or a partnership with content creators who have one.
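As a quick sanity check before planning a campaign, the thresholds above can be expressed as a simple rule. This is only an illustrative sketch: the function and constant names are our own, the figures are the ones quoted in this article, and YouTube may change its requirements or apply further criteria not shown here.

```python
# Thresholds as quoted in this article (assumptions; YouTube may revise them).
MIN_SUBSCRIBERS = 1_000
MIN_ANNUAL_WATCH_HOURS = 4_000


def meets_quoted_thresholds(subscribers: int, annual_watch_hours: float) -> bool:
    """Return True if a channel meets both thresholds quoted above.

    Hypothetical helper for illustration only; it does not query YouTube
    and does not cover any other eligibility criteria.
    """
    return (
        subscribers >= MIN_SUBSCRIBERS
        and annual_watch_hours >= MIN_ANNUAL_WATCH_HOURS
    )


# Example: a channel with plenty of subscribers but too little watch time.
print(meets_quoted_thresholds(subscribers=2_500, annual_watch_hours=3_200))
```

A channel that fails this check is a candidate for the creator partnership route or the YouTube for Action option described below.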

Consider content creators who align with your brand or category, and research their channels and content. Specialist websites and agencies, including theright.fit and hypeauditor.com, can help source content creators for a fee.

YOUTUBE FOR ACTION WITH PRODUCT FEEDS

For clients who don’t meet the eligibility requirements but still want to explore video for retail, there is another option. YouTube for Action campaigns allow us to promote videos on the YouTube network and attach a product feed through Google Merchant Centre, creating a virtual shop front for the viewer with easy “shop now” functionality.

This powerful format allows brands to generate both awareness and engagement, whilst also driving bottom-line sales. It can be managed through your Google Ads account, allowing you to optimise towards the same conversions and use the same audience signals as your other Google campaigns.

What is YouTube for Action?

Previously named TrueView for Action, this product allows advertisers to buy video ads on the YouTube network that are optimised towards a performance goal rather than pure reach or video views.

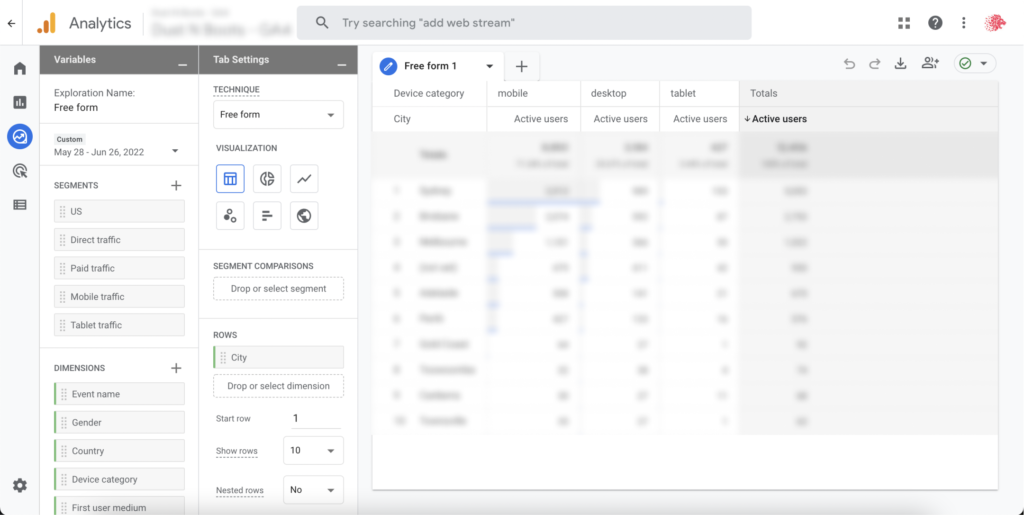

You can optimise towards:

- Website traffic

- Leads

- Sales/Purchases

You also have the option to choose your bid strategy based on:

- Cost per View

- Cost per Action

- Maximise Conversions

- Cost per thousand impressions

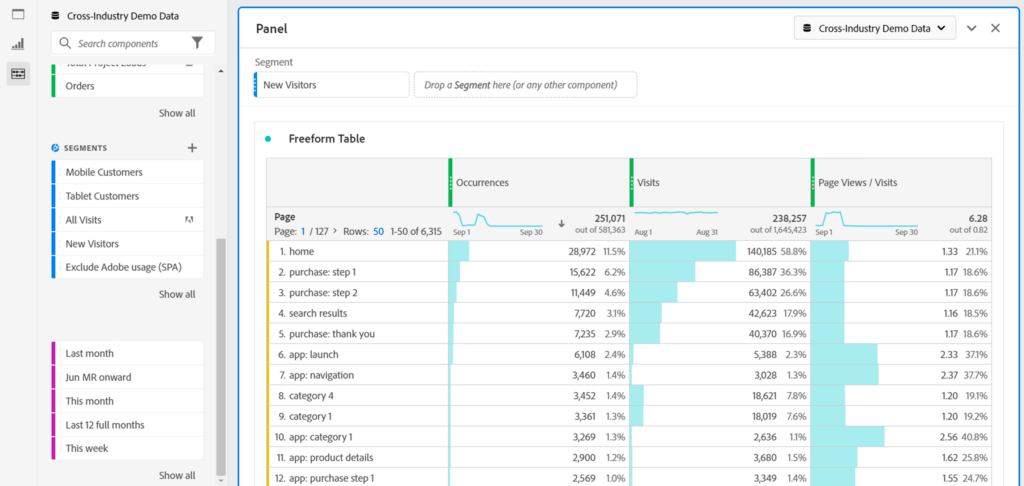

Who can I target?

YouTube and Google’s shared data provide a wealth of information to help us build audience segments that will fit your brand and services. The options include but are not limited to:

- Demographic targeting: Age, gender, location – based on signed-in user data

- Affinity audiences: Pre-defined interest/hobby and behavioural data based on users’ browsing history

- In-market audiences: Users deemed to be “in-market” for a product or service based on their searching behaviour and browsing history

- Life events: Based on what a user is actively researching or planning, e.g. graduation, retirement, etc.

- Topics: Align your content with similar themes to video content on the YouTube network

- Placement: Align your content to specific YouTube channels, specific websites, or content on channels/websites

- Keyword: Similarly to search, build portfolios of keywords to target specific themes on YouTube

The team at LION will work with you to select and define the right audiences to test and optimise to get the best results.

What content should I use?

As with any piece of content, there is no right or wrong answer, and what works for some brands may not work for others. Your video should align with your brand tone of voice and guidelines.

Think about what action you want the users to take and ensure the video aligns with this, e.g. if you want users to buy a specific product, show the product in the video and talk about its benefits. Testing multiple types of video content is the best way to learn about what your potential customers like and do not like.

What do I need to get started?

- At least one video uploaded to YouTube (we recommend 30 seconds in length)

- A Google Merchant Centre account and a Google Ads account

- A testing budget of at least $1,000

YOU CAN CHAT WITH THE TEAM AT LION DIGITAL AND WE CAN HELP YOU TO SELECT AND DEFINE THE RIGHT AUDIENCES TO TEST AND OPTIMISE TO GET THE BEST RESULTS

LION stands for Leaders In Our Niche. We pride ourselves on being true specialists in each eCommerce marketing channel. LION Digital has a team of certified experts, led by a head of department with 10+ years of experience in eCommerce and SEM. We follow an ROI-focused approach to paid search, backed by seamless coordination and detailed reporting, to help our clients meet their goals.

GET IN CONTACT TODAY AND LET OUR TEAM OF ECOMMERCE SPECIALISTS SET YOU ON THE ROAD TO ACHIEVING ELITE DIGITAL EXPERIENCES AND GROWTH

Leonidas Comino – Founder & CEO

Leo is a Deloitte award-winning and Forbes-published digital business builder with over a decade of success in the industry, working with market-leading brands.